I read an intriguingly funny article tonight. It's so funny because it applies to me at the moment. My brother and I have been burning firewood for heat this winter in Portland, Oregon. We've obtained our firewood from many different Craigslist advertisements, such as the one featured in this article's screenshot, and we've made some extra income selling our surplus to people who either need heat or just want the ambiance of a nice warm fire.

Seasoned wood does not, in fact, refer to seasoning the wood with salt, pepper, and Italian spices, although the idea does create some interesting mental pictures. For those who don't know, seasoned wood is wood that has been aged and naturally dried so that it will burn well. To be seasoned, most firewood must be aged for at least a year or two.

Although seasoned wood isn't seasoned in the sense of teeming with lemon-pepper or garlic and oregano, burning different kinds of wood does produce a plethora of uniquely enticing, delicious smells. Black walnut is perhaps my favorite. Set a piece in front of your fireplace, just inches from the transfer of potential to kinetic energy. Watch the blue flames emanating from a few well-seasoned, dry chunks of maple as the walnut slowly heats up. After a while, it smells like freshly baked bread and makes you want to break out the Country Crock and dig in. We did that one night, and the wood smelled so good I felt I could almost take a bite out of it!

With a fireplace, who needs Dish Network? It's all the entertainment I need.

Wednesday, December 17, 2008

Sunday, September 21, 2008

Dreamhost Hosting Special

I work with a client who uses Dreamhost as the hosting provider for a new web application. My client works with several other contractors besides me; some contracts have been temporary, while others have been long-term. During the initial phase, all development was done on local servers, and the website had only one hostname.

One of the newest consultants spent a small portion of his time setting up tools that not only make development more efficient but also help prevent catastrophic mistakes. Manual, repeated tasks are prone to error; it's not a matter of if a failure will occur, but when.

The consultant set up several domain names for us to use in the deployment process. Previously, the site was deployed to the server under the www subdomain. That's fine for code that has already been tested on the hosting platform, but it's risky for untested changes, which would go straight to the live site. We now have a "testing" subdomain and a "stage" subdomain. "Testing" is where untested code can be tried out on a separate build of the website. "Stage", of course, mirrors whatever is deployed live and acts as a staging ground for final tests of changes to ensure that they are indeed production ready.
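The promotion order described above (testing, then stage, then the live www site) can be captured in a small lookup so that a build never jumps straight to production. This is a minimal JavaScript sketch, not the consultant's actual tooling; the example.com hostnames and the nextEnvironment helper are hypothetical.

```javascript
// Hypothetical hostnames: "example.com" stands in for the client's real domain.
// Order matters: a build moves one step at a time, left to right.
const ENVIRONMENTS = [
  { name: "testing", host: "testing.example.com" }, // untested builds
  { name: "stage", host: "stage.example.com" },     // mirrors production for final checks
  { name: "live", host: "www.example.com" },        // production
];

// Returns the environment a build should be promoted to next,
// or null if it is already live. Unknown names are rejected.
function nextEnvironment(current) {
  const index = ENVIRONMENTS.findIndex((env) => env.name === current);
  if (index === -1) {
    throw new Error(`Unknown environment: ${current}`);
  }
  return index + 1 < ENVIRONMENTS.length ? ENVIRONMENTS[index + 1] : null;
}

console.log(nextEnvironment("testing").host); // stage.example.com
console.log(nextEnvironment("stage").host);   // www.example.com
console.log(nextEnvironment("live"));         // null
```

Keeping the order in one array means adding another environment later is a one-line change rather than a new set of manual steps.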

The consultant also set up Trac, an open-source wiki, bug tracker, and project management platform all rolled into one web application. It integrates with the Subversion repository, which the consultant also set up and configured using Dreamhost's web panel.

Dreamhost offers these features as part of the standard hosting package, starting at $5.95 per month depending on the length of your commitment. Of all the hosting providers I've looked at, Dreamhost is the best for new startups looking to establish a web presence using PHP, Perl, Ruby, and other popular programming languages. You'll never have to worry about running out of databases, as Dreamhost provides an unlimited supply of MySQL databases!

Some of the features that really stood out to me were the Jabber chat server, a full Unix shell, the Debian Linux operating system, and IMAP access so you can download your email to a mail client. A one-click install feature handles many popular packages, such as WordPress, Moodle, and Joomla. In addition, through the shell you can install other applications, such as Trac!

To make this deal even sweeter, you can get unlimited disk space and unlimited bandwidth if you sign up now by clicking the link in the left section of my website! This leaves lots of room for growth, and this may be the last hosting package you'll ever need!

The only catch: there are only 1111 slots left open for unlimited disk space and bandwidth, so sign up today!



Monday, September 1, 2008

Google Chrome Browser

While most of us spent our Labor Day weekend camping, barbecuing, or in my case, moving into a new home, the marketing team at Google was laboring away releasing marketing materials on what seems to be the next evolution of the web!

Google Chrome, a fully open source multi-process web browser, addresses many of the woes that web surfers encounter daily. Instead of worrying about browser lockups and making sure we don't go over 35 to 40 tabs in Firefox for fear of the dreaded browser crash, we can rest assured that the most we will ever have to worry about is a "tab crash".

What is a tab crash? According to the Google Chrome Comic book, each tab is launched as a separate process. What this means is that if there are N tabs open, there will be N JavaScript threads running, N copies of the global data structures, and a significantly reduced chance that a rogue JavaScript function will bring a user's web experience to a grinding halt.

Both Firefox and IE run as a single process. While IE does tout the advantage of running each tab in a different thread, the memory is still shared, and all tabs are still susceptible to crashes. In either case, the result is the same: one misbehaving page can bring down the entire browser.
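The isolation idea behind a "tab crash" can be illustrated outside the browser. This is a loose analogy in Node.js, not how Chrome is implemented: each hypothetical "tab" script runs in its own operating-system process via child_process.spawnSync, so a script that throws only takes down its own process while the others finish normally.

```javascript
const { spawnSync } = require("child_process");

// Hypothetical "tab" scripts; the second one throws, simulating a rogue page.
const tabScripts = [
  "console.log('tab 0 ok')",
  "throw new Error('rogue script')",
  "console.log('tab 2 ok')",
];

// Runs each script in a separate Node.js process (process.execPath is the
// running node binary). An uncaught exception makes that process exit with a
// non-zero status, but the parent and the other "tabs" keep going.
function runTabsInSeparateProcesses(scripts) {
  return scripts.map((src, i) => {
    const result = spawnSync(process.execPath, ["-e", src], { encoding: "utf8" });
    return { tab: i, crashed: result.status !== 0 };
  });
}

const results = runTabsInSeparateProcesses(tabScripts);
console.log(results);
```

Running all three scripts inside a single process instead would mean one uncaught error could end the whole run, which is the single-process weakness described above.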

However, I am very interested to see this new browser in action. How impervious to crashes is it going to be? What is this going to mean for web development? Will we need more CSS and HTML magicians to step forward and deal with yet another browser hack? Or will Google Chrome follow web standards and allow the same ease of web application development as Firefox and Safari do?

One question I have is about add-ons. Will this new browser be as extensible as Firefox? Is there, or will there eventually be, a market for Google Chrome add-ons?

More importantly, is this the first step towards the Google Operating System?

I guess tomorrow we will find out.


Monday, July 21, 2008

JavaScript and Java are Pass By Value

Proof that JavaScript and Java are Pass By Value

Pass by value and pass by reference can be daunting at times, and I too have been confused and frustrated by all of the babbling about whether Java is one or the other, that is, until I saw an article featuring the Litmus test. I saw the Litmus test in college, but it didn't completely make sense until I read Scott Stanchfield's "Dammit" article.

Today I read Jeff Cogswell's article asserting that JavaScript is pass by value. The author is indeed correct in his assertions; however, many comments rebutted his arguments. I thought it would be best to support his arguments by applying the Litmus test to JavaScript to settle once and for all whether JavaScript is pass by value or pass by reference.

Java is clearly pass by value. Scott Stanchfield's statement says it best: "Objects are not passed by reference. A correct statement would be Object references are passed by value."

The JavaScript Litmus Test

I decided to apply the Litmus test to JavaScript and see what the results are. Here is what I have so far:

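```javascript
function doLitmusTest() {
  var a1 = 5;
  var a2 = 7;
  swap(a1, a2);
  alert(a1 + "," + a2); // shows "5,7": the caller's variables are unchanged
}

// Swaps only the function's own local copies of the arguments.
function swap(a, b) {
  var temp = a;
  a = b;
  b = temp;
}

window.addEventListener("load", function () { doLitmusTest(); }, true);
```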

The output is as follows:

5,7

The values were not swapped. JavaScript fails the Litmus test, as Java did. This means that JavaScript is also pass by value.

In Java, there is a workaround that makes a working swap function possible, and it also works in JavaScript:

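```javascript
function anotherTest() {
  var obj1 = { value: 5 }; // object literal
  var obj2 = { value: 7 }; // object literal
  swapValue(obj1, obj2);
  alert(obj1.value + "," + obj2.value); // shows "7,5"
}

// Swaps the data inside the two objects; the caller's references still
// point at the same two objects, but their contents have been exchanged.
function swapValue(objA, objB) {
  var temp = objA.value;
  objA.value = objB.value;
  objB.value = temp;
}

window.addEventListener("load", function () { anotherTest(); }, true);
```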

The output is:

7,5

In Java, data can be "wrapped" in an object to allow the value to be changed, and JavaScript behaves the same way. All values, both primitives and object references, are passed by value. With primitives, changes made to one copy of a variable do not affect the other copy.

The rule is the same with object references. Objects themselves are not passed as parameters in Java or JavaScript; object references are. A reference points to a specific location in memory, and several references can point to the same location, so if the data at that location changes, the change is visible through every reference that points to it.

However, changes to the reference itself are not reflected outside the function. A rule of thumb when implementing a swap method: you can only change the caller's data by calling setter methods or assigning to public properties. Assigning a new value to the parameter itself merely makes that local copy of the reference point to a different location, leaving the caller's reference untouched. This is why swapValue worked as expected while swap did not.
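The difference between reassigning a reference and changing the data it points to can be shown directly. This is a minimal sketch; reassign and mutate are hypothetical helper names, and the values 5, 7, 12, and 2 are chosen to match the A and B examples that follow.

```javascript
// Reassigning a parameter only changes the function's local copy of the reference.
function reassign(obj) {
  obj = { value: 12 }; // local copy now points at a brand-new object
  return obj;
}

// Changing a property through the reference modifies the caller's object.
function mutate(obj) {
  obj.value = 2; // same object the caller sees
}

const a = { value: 5 };
const b = { value: 7 };

const fresh = reassign(a);
mutate(b);

console.log(a.value, fresh.value, b.value); // 5 12 2
```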

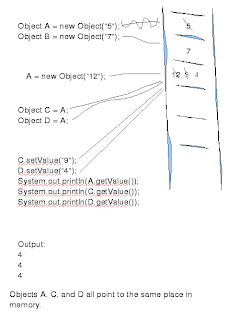

Below is a sequence of "visual art" to demonstrate the concepts visually:

The value of the object referenced by B is changed to a new value, but the object reference still points to the same location in memory.

For A, the "new" operator allocates new space in memory and instantiates a new object. The "12" is located in a different spot in memory.

B, on the other hand, has a value that was set using a setter method. Thus, the value has changed from "7" to "2" without destroying the original object.

References A, C, and D all point to the same place in memory. If a setter method is called through one of them, the change is visible through all three, because they all point to the same location in memory.

A good analogy to use to explain this concept is "nicknames". For instance, my name is James, but people also refer to me by Jim. Additionally, I've been called "Mort" before as well. All three names point to the same object, me! In the example I've shown you, A, C, and D are all names of the same object.
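The nickname analogy maps directly onto code: assigning an object to another variable copies the reference, not the object. This is a minimal sketch; the variable names are just the nicknames from the analogy, and the value property is hypothetical.

```javascript
// One object...
const james = { value: 5 };

// ...and two more names (references) for it, like the nicknames Jim and Mort.
const jim = james;
const mort = james;

// Changing the data through one name is visible through every name.
mort.value = 2;

console.log(james.value, jim.value, mort.value); // 2 2 2
console.log(james === jim && jim === mort);       // true
```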

Sunday, July 13, 2008

XSLT Transformations with XML Namespaces

As of this writing, there aren't many resources on the Internet about transforming XML that contains namespaces. Yahoo's Weather Web Services API returns data in the form of RSS, and this data is formatted not only with elements and attributes, but also with namespaces.

I'm experimenting with the Yahoo Weather API for a potential project. Since other weather APIs may be used, it makes more sense from an architectural standpoint to transform Yahoo's data into my own XML data structure. If the client decides that he or she prefers Google's Weather API, or the Fox News API, all we need to do is create a new XSLT stylesheet to convert that data into the format recognized by our server.

Here is part of the Yahoo Weather data, taken from here:

```xml
<?xml version="1.0" encoding="UTF-8" standalone="yes" ?>
<rss version="2.0" xmlns:yweather="http://xml.weather.yahoo.com/ns/rss/1.0" xmlns:geo="http://www.w3.org/2003/01/geo/wgs84_pos#">
  <channel>
    <title>Yahoo! Weather - Portland, OR</title>
    <link>http://us.rd.yahoo.com/dailynews/rss/weather/Portland__OR/*http://weather.yahoo.com/forecast/97206_f.html</link>
    <description>Yahoo! Weather for Portland, OR</description>
    <language>en-us</language>
    <lastBuildDate>Sat, 28 Jun 2008 4:53 pm PDT</lastBuildDate>
    <ttl>60</ttl>
    <yweather:location city="Portland" region="OR" country="US"/>
    <yweather:units temperature="F" distance="mi" pressure="in" speed="mph"/>
    <yweather:wind chill="101" direction="300" speed="10" />
    <yweather:atmosphere humidity="22" visibility="10" pressure="29.82" rising="2" />
    <yweather:astronomy sunrise="5:24 am" sunset="9:03 pm"/>
    <image>
      <title>Yahoo! Weather</title>
      <width>142</width>
      <height>18</height>
      <link>http://weather.yahoo.com</link>
      <url>http://l.yimg.com/us.yimg.com/i/us/nws/th/main_142b.gif</url>
    </image>
    <item>
      <title>Conditions for Portland, OR at 4:53 pm PDT</title>
      <geo:lat>45.52</geo:lat>
      <geo:long>-122.68</geo:long>
      <link>http://us.rd.yahoo.com/dailynews/rss/weather/Portland__OR/*http://weather.yahoo.com/forecast/97206_f.html</link>
      <pubDate>Sat, 28 Jun 2008 4:53 pm PDT</pubDate>
      <yweather:condition text="Fair" code="34" temp="101" date="Sat, 28 Jun 2008 4:53 pm PDT" />
      <description><![CDATA[
<img src="http://l.yimg.com/us.yimg.com/i/us/we/52/34.gif"/><br />
<b>Current Conditions:</b><br />
Fair, 101 F<BR />
<BR /><b>Forecast:</b><BR />
Sat - Mostly Clear. High: 97 Low: 65<br />
Sun - Mostly Sunny. High: 91 Low: 66<br />
<br />
<a href="http://us.rd.yahoo.com/dailynews/rss/weather/Portland__OR/*http://weather.yahoo.com/forecast/USOR0275_f.html">Full Forecast at Yahoo! Weather</a><BR/>
(provided by The Weather Channel)<br/>
]]></description>
      <yweather:forecast day="Sat" date="28 Jun 2008" low="65" high="97" text="Mostly Clear" code="33" />
      <yweather:forecast day="Sun" date="29 Jun 2008" low="66" high="91" text="Mostly Sunny" code="34" />
      <guid isPermaLink="false">97206_2008_06_28_16_53_PDT</guid>
    </item>
  </channel>
</rss><!-- api1.weather.sp1.yahoo.com compressed/chunked Sat Jun 28 17:19:32 PDT 2008 -->
```

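Note that the temperature and other weather data are stored in elements that belong to the yweather namespace. To reach them, the stylesheet must bind a prefix to the same namespace URI the feed uses (http://xml.weather.yahoo.com/ns/rss/1.0); XPath expressions such as //yweather:condition/@temp then match the namespaced elements. The XSLT stylesheet below demonstrates how to obtain the temp attribute from the yweather:condition element: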

```xml
<?xml version="1.0" encoding="ISO-8859-1"?>
<!-- The yweather prefix must be bound to the same namespace URI the feed uses;
     exclude-result-prefixes keeps that declaration out of the HTML output. -->
<xsl:stylesheet version="1.0"
    xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
    xmlns:yweather="http://xml.weather.yahoo.com/ns/rss/1.0"
    exclude-result-prefixes="yweather">
  <xsl:template match="/">
    <html>
      <body>
        <h2>Weather</h2>
        <table border="1">
          <xsl:for-each select="rss/channel">
            <tr>
              <td>title</td>
              <td class="title"><xsl:value-of select="title" /></td>
            </tr>
            <tr>
              <td>link</td>
              <td class="link"><xsl:value-of select="link" /></td>
            </tr>
            <tr>
              <td>curCond</td>
              <td class="curCond">
                <xsl:value-of select="//yweather:condition/@text" />
              </td>
            </tr>
            <tr>
              <td>curTemp</td>
              <td class="curTemp">
                <xsl:value-of select="//yweather:condition/@temp" />
              </td>
              <td id="curTemp">
                <xsl:value-of select="//yweather:condition/@temp" />
              </td>
            </tr>
            <!-- One group of rows per yweather:forecast element -->
            <xsl:for-each select="//yweather:forecast">
              <tr>
                <td>forecast</td><td class="day">day: <xsl:value-of select="@day" /></td>
              </tr>
              <tr>
                <td></td><td class="date">date: <xsl:value-of select="@date" /></td>
              </tr>
              <tr>
                <td></td><td class="low">low: <xsl:value-of select="@low" /></td>
              </tr>
              <tr>
                <td></td><td class="high">high: <xsl:value-of select="@high" /></td>
              </tr>
            </xsl:for-each>
          </xsl:for-each>
        </table>
      </body>
    </html>
  </xsl:template>
</xsl:stylesheet>
```

On another note, I've done XML conversions the hard way before: by parsing the XML with PHP or JSP and dynamically rebuilding it! While that approach would also solve my problem, using XSLT lets me keep the transformation layer separate from the business logic.

Although getting started with XSLT and converting XML with namespaces was somewhat time-consuming, I can already feel the weight lifting off my mind knowing that changing my data sources won't involve troubleshooting PHP errors!
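The payoff of this design is that adding a data source means adding one transformation rather than rewriting the server. As a rough JavaScript analogy (not XSLT, and not part of the original project), the sketch below registers one transform function per provider, playing the role a separate stylesheet plays here. The transforms registry, toCommonFormat, and the output field names are hypothetical, and the feed is assumed to be already parsed into plain objects.

```javascript
// One transform per data source; each returns the same hypothetical common format.
const transforms = {
  // Input mirrors the attributes of Yahoo's yweather:condition and
  // yweather:forecast elements, assumed to be parsed into plain objects already.
  yahoo(feed) {
    return {
      condition: feed.condition.text,
      tempF: Number(feed.condition.temp),
      forecasts: feed.forecasts.map((f) => ({
        day: f.day,
        low: Number(f.low),
        high: Number(f.high),
      })),
    };
  },
};

// Looks up the transform for a source; unknown sources fail loudly.
function toCommonFormat(source, feed) {
  const transform = transforms[source];
  if (!transform) {
    throw new Error(`No transform registered for source: ${source}`);
  }
  return transform(feed);
}

// Sample input using values from the Portland feed shown earlier.
const yahooFeed = {
  condition: { text: "Fair", temp: "101" },
  forecasts: [
    { day: "Sat", low: "65", high: "97" },
    { day: "Sun", low: "66", high: "91" },
  ],
};

console.log(toCommonFormat("yahoo", yahooFeed));
```

Supporting another provider would mean adding one more entry to transforms; code that consumes the common format stays the same.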


Saturday, July 5, 2008

Share VMWare Image between Dual-Boot Operating Systems

How to Share a VMWare Image Between Windows XP and Ubuntu 8.04

Sharing a VMWare Image in a dual-boot setup involves the following prerequisites:

- One NTFS partition with Windows XP Professional installed
- One ext3 partition with Ubuntu 8.04 installed
- One FAT32 partition, shared between Ubuntu and Windows
- At least 1GB of RAM
- VMPlayer installed in both Windows XP and Ubuntu 8.04
- VMImages, stored on the FAT32 partition

Stored on the FAT32 partition is one Windows XP Home VMImage. I don't have VMWare Workstation, so you may be wondering how I was able to create my own Windows XP Home image. I'll get to that later.

I first booted into the Windows XP Home image from the Windows XP Pro host. I moved some files around, made changes to others, and then shut down the VMImage. I rebooted the computer into Ubuntu 8.04, started VMPlayer, and booted into the very same Windows XP Home image I had just been working with on the XP host. My changes were all there!

When I first started up the VMImage in Ubuntu, the system asked me if I moved or copied the VMImage. Well, I didn't move it, but I didn't copy it either. Since I wasn't sure what option to pick, I selected the "copied" option recommended by VMPlayer if you don't know whether or not you moved or copied the image. This will happen again when I switch back to the Windows host. So far, selecting "copied" has not negatively affected performance.

The first time booting the image in the new host, networking didn't immediately work. Wait a few minutes and it may fix itself. You can also go into VMWare tools, if it's installed, and make sure your Ethernet card is enabled. So far, I've had no issues with networking.

I'm very impressed with VMPlayer! I can run Windows XP in a VMimage in both Windows and Linux. It's stable, fast, and allows me to work on Windows projects on both Ubuntu and Windows hosts.

Using Microsoft Virtual PC to Create Image and Convert with VMWare Converter

Previously, I was using Microsoft Virtual PC 2007, which doesn't run on Linux. To answer the question above of how I created a Windows XP Home VMWare image without VMWare Workstation: I used VMWare Converter to convert my Windows XP Home Virtual PC image to VMWare. In Virtual PC, the image took a long time to boot, and once booted it was so sluggish it was unusable. I quit using the Virtual PC image long ago because it was simply too slow.

In VMPlayer, I feel that the Guest operating system runs smoother than the Windows XP host itself! I'd recommend this setup for anyone who wants to run Windows from Linux and Windows from Windows. Additionally, although Virtual PC is slow, it's a great tool to allow you to create a Virtual image and then convert it to VMWare using the VMWare Converter.

UPDATE: I've been using this setup for over a month and I'm still happy with it!

Labels: Cross-platform, Linux, Operating systems, Ubuntu, Windows

Saturday, June 28, 2008

Cisco VPN on Ubuntu 8.04

Deadlines approach fast! In times like these, it's oh so helpful to be able to dig a tunnel from your office to your home so that you have access to the tools you need! Right now you may be picturing me underground with a shovel, slowly scooping through layers of packed dirt. I'm not using a shovel. I didn't break a sweat, and I didn't use a single gallon of gasoline!

I installed Cisco VPN on Ubuntu 8.04. Essentially, I did create a tunnel from my office to my home, expanding the bubble that is our corporate network to surround my makeshift home office. It was both easier than I thought and harder than I thought. It was easier thanks to the wonderful community of Ubuntu users, yet harder because it required kernel patches and Google searches and a little patience and persistence.

I found a link to a fix for the Cisco VPN installation issue with Ubuntu 8.04 Hardy Heron. In fact there are several, which helps confirm that the steps you follow won't cause your refrigerator to start leaking. A poster in the forum described the exact problem I was facing: like me, he was using an older version of the VPN Client, and according to another poster, it wasn't compatible with the new kernel. That poster supplies a link to the patch, which can also be found all over the net.

After pulling our friendly IT specialist away from his episode of Babylon 5, I was able to obtain the latest version of the VPN Client. It was in fact the same version the forum poster recommended. After I applied the patch to the new version, all was good. Well, almost. I couldn't get the VPN to start; it complained that the profile couldn't be found. What it should have done was laugh at me, call me an idiot, and tell me to put the profile configuration file in the correct directory! Then it should have shaken its head and told me to read the usage instructions more carefully and to leave the .pcf file extension off the profile name in the command I was using.

After reading further in the forum, I found that the Ubuntu community was nice enough to remind me of this fact, without laughing at me, and without the shake of a head.

Easy enough. I started the VPN Client and am now on my way to meeting my deadline, all without leaving the house! In addition, workaholic that I am, I can now log in at night and on future weekends to get more work done!

I must say I'm pretty impressed with Ubuntu 8.04! I'm glad I tried it out. I haven't booted into Windows XP Pro in over a month now, and so far there hasn't been a need. Of course, I do have VMPlayer installed with a Windows XP Home VMImage, so I'm kind of cheating, but that's another adventure!

I installed Cisco VPN on Ubuntu 8.04. Essentially, I did create a tunnel from my office to my home, expanding the bubble that is our corporate network to surround my makeshift home office. It was both easier than I thought and harder than I thought. It was easier thanks to the wonderful community of Ubuntu users, yet harder because it required kernel patches and Google searches and a little patience and persistence.

I found a link to fix the Cisco VPN Installation Issue with Ubuntu 8.04 Hardy Heron. There are in fact several of them, which helps to confirm that the steps you follow won't cause your refrigerator to start leaking. A poster in the forum also described my exact problem that I was facing. He was using an older version of the VPN Client, as I was. And according to another poster, it wasn't compatible with the new kernel. He supplies the reader with a link to the patch, which can also be found all over the net.

After pulling our friendly IT Specialist from his episode of Babylon 5, I was able to obtain the latest version of the VPN Client. It was in fact the same version listed in the forum that the poster recommended. After applying the patch to the new version, all was good. Well, almost. I couldn't get the VPN to start. It was complaining that the profile couldn't be found. What it should have done was laughed at me, called me an idiot, and told me to put the profile configuration file in the correct directory! Then it should have shook it's head and told me to try reading the usage instructions more carefully and to omit using the .pcf file extension in the command I was using.

After reading further in the forum, I found that the Ubuntu community was nice enough to remind me of this fact, without laughing at me, and without the shake of a head.

Labels:

Collaboration,

Linux,

Problem Solving,

Ubuntu,

VPN

Friday, June 27, 2008

HTML Multiple Reply Signatures for Gmail 1.0.2.3

Don't get too excited. The HTML Multiple Reply Signatures for Gmail 1.0.2.3 update doesn't include many new features. I got rid of the red update link that appeared in the toolbar. I don't think it was working correctly, and it took up valuable browser real estate. Bad idea.

I did try to see what would happen in Firefox 3. Sadly, the extension doesn't work in Mozilla's newest and fastest browser. The problem isn't the editor or even the signature injection; it's the module that reads data from the XML file. That module was a hack anyway. I could probably get by with the Firefox RDF API, or perhaps store the signatures using the preferences system.

The good news is that converting to Firefox 3 wouldn't be hard. The bad news is that I don't have time to do it. We just need to fix the mechanism that reads signatures from the XML data file! That's it! Everything else appeared to work when I tested it!

Does anyone who knows JavaScript happen to be bored? HTML Multiple Reply Signatures for Gmail is licensed under the GPL! You can crack open the XPI and try to fix this yourself! I'll even tell you which files to modify if you email me!

I did start working on a version for Gmail 2. Interestingly enough, there aren't many solutions out there for Gmail 2, yet there was tons of competition for Gmail 1. I have a prototype that Susan Hemmersmeier has happily tested for me. It's very buggy, though: too buggy to put up on the blog, and too buggy for her to use.

Susan asked me when it will be finished. I hate to keep promising that I'll "eventually get to it." You see, thanks to this blog, I landed some contract work! It's a lot of fun, but it takes up a lot of time. As a result, it would appear that HTML Multiple Reply Signatures for Gmail may be a (sniff...) dying project...

Do you have the skills to resurrect it from its ashes? Let me know! I can definitely provide moral support and answer technical questions; I just can't devote programming time to it at the moment.

James

Labels:

Collaboration,

Firefox development,

Gmail,

Greasemonkey,

HTML Signatures,

JavaScript,

XUL

Tuesday, June 10, 2008

getElementByAttribute

I don't use many JavaScript libraries. I prefer to write my own implementations of the code I need so that I don't have to import an entire library (although the code I'm referring to in this post was not written by me). With Java, classes you never use are simply never loaded, but the browser has to download and parse every script you include, whether you call it or not. There is really no mainstream mechanism for the browser to say "Only import method X".

I've used Taconite and DWR on a current project, but as a general rule I've found that, for what I do, most libraries solve only one part of the problem. Taconite is great for AJAX support and for adding XHTML to the DOM while still maintaining a readable XHTML file, so you don't have to wrap everything in DOM method calls. However, it falls short when it comes to cross-domain requests. Similarly, DWR is great for making AJAX calls by invoking a JavaScript wrapper with the same name as your Java class method, but it suffers from the same limitation.

I'll write more on this topic later, but the main purpose of this post is to identify the source of some code I found on Mahesh Lambe's blog.

Mahesh obviously paid attention in school! The getElementByAttribute function he wrote uses two inner functions and recursion to search for an element that has the attribute you specify with the value you specify. The search makes two kinds of recursive calls: one dives down through the child elements, while the other moves across to the next sibling. The result is a thorough, depth-first check of the DOM tree. It vaguely reminds me of something I may have done with Fibonacci numbers in Lisp in college.
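
For readers who want to see that child-then-sibling recursion spelled out, here is a rough sketch of the same idea in Java, run against the JDK's built-in W3C DOM (org.w3c.dom). It is my own transliteration, not Mahesh's JavaScript, and the class name AttributeSearch is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;

// Hypothetical Java port of the child/sibling recursion; not the original code.
class AttributeSearch {

    // Entry point: searches `root` and everything beneath it, in document order.
    static Element getElementByAttribute(Node root, String name, String value) {
        if (matches(root, name, value)) {
            return (Element) root;
        }
        return searchFrom(root.getFirstChild(), name, value);
    }

    // Inner helper with two recursive calls: one dives into the children,
    // the other moves across to the next sibling.
    private static Element searchFrom(Node node, String name, String value) {
        if (node == null) {
            return null;
        }
        if (matches(node, name, value)) {
            return (Element) node;
        }
        Element found = searchFrom(node.getFirstChild(), name, value);
        if (found != null) {
            return found;
        }
        return searchFrom(node.getNextSibling(), name, value);
    }

    // True if `node` is an element whose attribute `name` equals `value`.
    private static boolean matches(Node node, String name, String value) {
        if (node.getNodeType() != Node.ELEMENT_NODE) {
            return false;
        }
        Element element = (Element) node;
        return element.hasAttribute(name) && value.equals(element.getAttribute(name));
    }

    // Parses an XML string with the JDK's built-in parser.
    static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse XML", e);
        }
    }

    public static void main(String[] args) {
        Document doc = parse("<div><p id='a'/><ul><li class='x' id='b'/></ul></div>");
        Element hit = getElementByAttribute(doc.getDocumentElement(), "class", "x");
        System.out.println(hit.getAttribute("id")); // prints b
    }
}
```

Because the sibling walk is recursive too, a node with thousands of children turns into thousands of nested calls; a plain loop over siblings would avoid that if stack depth ever became a problem.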

This is one of the reasons that I love JavaScript. I just don't come across code like this in Java. With JavaScript, the code seems more true to the spirit of Computer Science, while still representing the language of interactive web applications.

Sunday, May 25, 2008

Open Source Infections

This article is in response to a comment left on this article.

Below is an excerpt from the GPL, found at http://www.gnu.org/licenses/gpl.html:

When we speak of free software, we are referring to freedom, not price. Our General Public Licenses are designed to make sure that you have the freedom to distribute copies of free software (and charge for them if you wish), that you receive source code or can get it if you want it, that you can change the software or use pieces of it in new free programs, and that you know you can do these things.

The GPL license states that the purpose is to give developers more freedom to use the source code; however, it's not truly free! Here is another excerpt from the GNU FAQ:

If I add a module to a GPL-covered program, do I have to use the GPL as the license for my module?

The GPL says that the whole combined program has to be released under the GPL. So your module has to be available for use under the GPL.

But you can give additional permission for the use of your code. You can, if you wish, release your program under a license which is more lax than the GPL but compatible with the GPL. The license list page gives a partial list of GPL-compatible licenses.

You have a GPL'ed program that I'd like to link with my code to build a proprietary program. Does the fact that I link with your program mean I have to GPL my program?

Not exactly. It means you must release your program under a license compatible with the GPL (more precisely, compatible with one or more GPL versions accepted by all the rest of the code in the combination that you link). The combination itself is then available under those GPL versions.

If so, is there any chance I could get a license of your program under the Lesser GPL?

You can ask, but most authors will stand firm and say no. The idea of the GPL is that if you want to include our code in your program, your program must also be free software. It is supposed to put pressure on you to release your program in a way that makes it part of our community.

You always have the legal alternative of not using our code.

The term "infect" perfectly describes what some open source licenses can do to code. Specifically, any code one writes that uses a GPL library can become "blanketed" by the GPL. The author of the comment claims that this is FUD. It's not FUD; it's reality. Sure, open source software is great, and I have even written open source software myself. However, I can't use GPL code in a proprietary project, because the license would require the combined program to be released under GPL-compatible terms, making the proprietary project non-proprietary. The analogy of an infection paints a perfect picture of how the license spreads from the open source library to the proprietary code.

This isn't to say that all open source licenses are bad. It's important to differentiate between the GPL, LGPL, Apache License, MIT License, and other open source licenses. To find a license that really gives developers the freedom to use the software however they want, one would need to look at the LGPL, the Apache License, or another license that allows open source software to be integrated into a proprietary application.

While there is nothing wrong with the GPL, it's important to understand that there is a time and a place to use this license, and that GPL-licensed code may not be good for every project.

Labels:

Business,

Collaboration,

Open Source Licensing

Saturday, May 10, 2008

Open Source JavaScript Compressor

Are you concerned about having your Firefox Extension's JavaScript code compromised? XPI files are ordinary ZIP archives, so they can be extracted and the code easily viewed. One solution that many organizations use to keep their JavaScript code away from prying eyes is a JavaScript obfuscator.

Also known as a script compiler or script compressor, an obfuscator takes human-readable JavaScript code and converts it into text that is very difficult for humans to follow. When you're ready to deploy your product, your developers can obfuscate a copy of the code for distribution and keep the original human-readable version for continued maintenance and development.

In addition to being more difficult to reverse-engineer, compressed JavaScript files are often 40% to 60% smaller than their aesthetically pleasing human-readable counterparts, thanks to the removal of comments, whitespace, and line breaks.
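
To make the size savings a bit more concrete, here is a deliberately naive Java sketch of just the comment and whitespace stripping. The class NaiveMinifier is hypothetical and is nowhere near a real compressor or obfuscator: it ignores block comments, trailing comments, and string literals, and it renames nothing.

```java
import java.util.ArrayList;
import java.util.List;

// Toy illustration only: strips whole-line // comments, blank lines, and
// leading/trailing whitespace. It does NOT understand block comments,
// trailing comments, or string literals, and it renames nothing.
class NaiveMinifier {

    static String minify(String source) {
        List<String> kept = new ArrayList<>();
        for (String line : source.split("\n")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("//")) {
                continue; // drop blank lines and whole-line comments
            }
            kept.add(trimmed);
        }
        // Line breaks are kept so JavaScript's automatic semicolon
        // insertion still sees the same statement boundaries.
        return String.join("\n", kept);
    }

    // Percentage of characters removed, rounded down.
    static int percentSaved(String original, String minified) {
        if (original.isEmpty()) {
            return 0;
        }
        return (int) (100L * (original.length() - minified.length()) / original.length());
    }

    public static void main(String[] args) {
        String src = "// Adds two numbers.\n"
                + "function add(a, b) {\n"
                + "    // Return the sum.\n"
                + "    return a + b;\n"
                + "}\n";
        String out = minify(src);
        System.out.println(out);
        System.out.println(percentSaved(src, out) + "% smaller");
    }
}
```

It keeps line breaks on purpose, because blindly joining lines can change the meaning of JavaScript that relies on automatic semicolon insertion. Real tools take care of those cases properly.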

http://www.javascript-source.com/

Please see the above link for a quick example of the difference between a human-readable JavaScript function and an obfuscated one. I wouldn't recommend purchasing this product, though, as there are open source tools out there that accomplish the same goal.

http://javascriptcompressor.com/

This one is Dean Edwards' Packer. The problem is that the website also offers a decoder, which kind of defeats the purpose of obfuscation, so I would recommend it only for compression, not obfuscation.

These tools appear to be a great way to keep proprietary JavaScript code from falling into the wrong hands. Obfuscation is not perfect or foolproof, but consider this question: is a thief more likely to snoop around in a car with unlocked doors or in one that is securely locked?

Here are some links to free or open source obfuscators. All three work from the command line:

- YUI Compressor

- ObfuscateJS JavaScript Obfuscator

- JSO (JavaScript Obfuscator)

Saturday, April 5, 2008

Model View Squared Controller

At my place of work, Model View Controller (MVC) is the architectural pattern used as the foundation for the applications that we develop and maintain. MVC can be found in just about every corner of the software industry, from Agile development shops to those that follow more traditional models of development.

As Computer Scientists, we often look for ways to solve a problem not once, but for N cases. Some really smart people at the Apache Foundation and SpringSource have designed and implemented solutions for Java that lay the foundation or "framework" for quickly and efficiently starting the development of an MVC application.

MVC Framework Flow in Struts and Spring

With both Struts and Spring, a request is sent from a browser to a servlet container, such as Tomcat or Jetty. The container hands the request off to a servlet declared in the web.xml deployment descriptor. The servlet processes the request and hands it off to a controller. The controller, typically an Action subclass in Struts or a Controller implementation in Spring, calls the model, which retrieves data from the data source, manipulates it, and passes it back to the controller. Afterwards, the result is forwarded to the view. In Struts, the Action returns an ActionForward obtained by calling mapping.findForward with a String that maps to a JSP page declared in the configuration file. In Spring, the controller returns a ModelAndView object constructed with a view name, which a view resolver typically maps to a JSP page.
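
The flow above can be sketched without either framework. The following Java sketch is a hypothetical stand-in: MvcFlowSketch, Result, and SalesModel are invented names that only mirror the roles of the servlet, the controller, the model, and the view mapping; they are not Struts or Spring classes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Framework-free sketch of the request flow. None of these types belong to
// Struts or Spring; they only mirror the roles described in the text.
class MvcFlowSketch {

    // What a controller hands back: a logical view name plus model data,
    // loosely analogous to a Spring ModelAndView or a Struts ActionForward.
    static final class Result {
        final String viewName;
        final Map<String, Object> model;

        Result(String viewName, Map<String, Object> model) {
            this.viewName = viewName;
            this.model = model;
        }
    }

    // The "model": retrieves data from a data source (an in-memory map here).
    static final class SalesModel {
        private final Map<String, Integer> salesByRegion = new HashMap<>();

        SalesModel() {
            salesByRegion.put("west", 42);
        }

        int salesFor(String region) {
            return salesByRegion.getOrDefault(region, 0);
        }
    }

    // Path -> controller, and view name -> view, much as web.xml and
    // struts-config.xml map requests to actions and forwards to JSP pages.
    private final Map<String, Function<Map<String, String>, Result>> controllers = new HashMap<>();
    private final Map<String, Function<Map<String, Object>, String>> views = new HashMap<>();

    MvcFlowSketch() {
        SalesModel sales = new SalesModel();
        controllers.put("/report", params -> {
            String region = params.getOrDefault("region", "west");
            Map<String, Object> data = new HashMap<>();
            data.put("region", region);
            data.put("sales", sales.salesFor(region));
            return new Result("reportView", data);
        });
        views.put("reportView", m -> "<p>" + m.get("region") + ": " + m.get("sales") + "</p>");
    }

    // Plays the dispatching servlet: pick the controller, run it, render the view.
    String handle(String path, Map<String, String> params) {
        Function<Map<String, String>, Result> controller = controllers.get(path);
        if (controller == null) {
            return "404 Not Found";
        }
        Result result = controller.apply(params);
        return views.get(result.viewName).apply(result.model);
    }

    public static void main(String[] args) {
        Map<String, String> params = new HashMap<>();
        params.put("region", "west");
        System.out.println(new MvcFlowSketch().handle("/report", params));
    }
}
```

The view-name indirection serves the same purpose as in the real frameworks: the controller never names a page directly, so the view can be swapped by changing the mapping alone.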

Advantages and Disadvantages of Struts and Spring

This particular pattern is well-known for decoupling the business logic from the user interface, the user interface from the controller, and the controller from the business logic. The advantage is that the view can be modified, maintained, or completely replaced, independent of the rest of the system.

Well, almost...

If you want to change the view, it's still a development issue. You still have to deploy a new Web Application Archive, or WAR file for short. You still have to test the application, as other developmental changes could affect the behavior of the system.

Unless you break the application up into completely separate modules that all exist outside the application...

Velocity, a templating engine developed by the Apache Foundation, fills much the same role as JavaServer Pages (JSP), except that Velocity templates can be loaded from outside the WAR file, even from a completely different server, independent of the application.

The Velocity Advantage

Picture an enterprise-level reporting tool designed to be hosted by an application service provider. Imagine that there are thousands of clients who use this system and who regularly depend on the functionality. If you're a project manager for this reporting tool and you want to allow all of your clients to customize and skin the user interface without needing to involve your developers, then you need the Velocity Advantage!

The JSP Disadvantages

Even with MVC, you may have a few JSP pages shared by every client, creating a tightly coupled system where a change to the HTML structure for one client affects thousands of others, perhaps with disastrous results. While the number of pages is small, it may take a lot of work to make them handle every client's logical case.

On the other hand, you could have N JSP pages for N clients. But then deploying a new feature means modifying all N JSP pages.

No matter what solution you use, the fact remains that updating the user interface becomes a development issue that involves a complete development, testing, and deployment cycle, as well as the possibility of either introducing new bugs into the system or creating a situation where you require intense, time-consuming CVS management. Being organized takes time.

With Velocity, however, these JSP page equivalents could be stored on the client's servers, or on an external server that you maintain specifically to host these view components. Suppose you then have a configuration file that stores the location of the view for each client, kind of like struts-config but better: it exists outside the WAR file! And suppose the client has control over which view they use!

Sure, with Struts and Spring, the configuration lives in an XML file rather than in Java code, but that file is still packaged inside the WAR, so changing it means building a new WAR file and possibly restarting your servlet container. This, of course, equates to downtime!

Here is where those smart people at the Apache Foundation failed to completely solve this problem. (For the record, the developers of Struts are extremely bright problem solvers who have made significant contributions to the development community. These contributions have reduced the cost of development significantly in many J2EE environments, and without them, I would probably still be sorting through a mess of code trying to figure out how to write a controller! Additionally, I have yet to see a container or framework that solves this problem in the manner that I propose.) What's the point of externalizing all of my configuration if changing it still requires me to disrupt my production systems? In most cases, any change made to web.xml, server.xml, struts-config.xml, tiles-defs.xml, or any other configuration file requires a servlet container restart.

And this is exactly the type of problem that would make the above scenario fail with a framework such as Struts, or Spring, or WebWork, or Struts 2.

Model View Squared Controller

The framework can, of course, still be used, just not with the struts-config.xml file. To allow clients to modify their HTML or CSS or change the view that they see, the application has to read your own customized, instantly reloadable configuration schema, not the framework's. You can use an XML file on your server or even a database, as long as changes to the data are recognized immediately.
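
One way to get an "instantly reloadable" configuration is to check the file's modification time on each lookup. The sketch below assumes a Java properties file (rather than XML or a database) that maps hypothetical client IDs to template locations; ReloadableViewRegistry is an invented name, not part of any framework.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileTime;
import java.util.Properties;

// Hypothetical per-client view registry kept outside the WAR. Each line of the
// properties file maps a client ID to the location of that client's template.
class ReloadableViewRegistry {

    private final Path configFile;
    private Properties mappings = new Properties();
    private FileTime loadedVersion; // modification time of the last load, null at first

    ReloadableViewRegistry(Path configFile) {
        this.configFile = configFile;
    }

    // Returns the template location for a client, re-reading the file first
    // if it has changed, so edits take effect without a container restart.
    synchronized String templateFor(String clientId, String defaultLocation) {
        try {
            reloadIfChanged();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return mappings.getProperty(clientId, defaultLocation);
    }

    private void reloadIfChanged() throws IOException {
        FileTime modified = Files.getLastModifiedTime(configFile);
        if (modified.equals(loadedVersion)) {
            return; // unchanged since the last load
        }
        Properties fresh = new Properties();
        try (InputStream in = Files.newInputStream(configFile)) {
            fresh.load(in);
        }
        mappings = fresh;
        loadedVersion = modified;
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("client-views", ".properties");
        Files.write(file, "acme=http://views.example.com/acme/report.vm\n".getBytes(StandardCharsets.UTF_8));
        ReloadableViewRegistry registry = new ReloadableViewRegistry(file);
        System.out.println(registry.templateFor("acme", "default/report.vm"));
        System.out.println(registry.templateFor("globex", "default/report.vm"));
    }
}
```

Polling the modification time is cheap but coarse, since some file systems record it only to the second; a database table with a version column would follow the same pattern.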

This is the type of framework that I want to develop. I'll call it Model View Squared Controller. It's too long, though; I need a better name. The concept is that your view would consist of a single JSP page whose only job is to output data from a bean or even from the HttpServletRequest object. The controller would populate that bean using a view template retrieved from outside the application: the template would be loaded as a Velocity template, processed in the controller, and the result forwarded to the JSP page.

Essentially, there are two "views"; one is part of the application and the other plugs into this view. The JSP page within the WAR file does nothing except render the data, while the other view -- the plugged-in view -- is actually retrieved from a remote data source and assembled in the controller. It's almost as if your view becomes a piece of data. It actually is data!
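
Here is a minimal sketch of the two views working together. The template is fetched at runtime through a hypothetical templateSource function, rendered in the controller, and stored as a request attribute that the single JSP page would just print. In a real deployment the rendering would be done by Velocity itself; the regex substitution below is only a stand-in so the sketch runs with nothing but the JDK.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch of the "two views" idea: the plugged-in view is a template fetched at
// runtime, rendered in the controller, and handed to one generic JSP page.
class PluggedInViewController {

    // Stand-in for a real template engine such as Velocity: it only replaces
    // $name references with model values (no directives, loops, or escaping).
    private static final Pattern REFERENCE = Pattern.compile("\\$([A-Za-z][A-Za-z0-9]*)");

    static String render(String template, Map<String, Object> model) {
        Matcher m = REFERENCE.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            Object value = model.get(m.group(1));
            // Unknown references are left as-is, which is also Velocity's default.
            String text = (value == null) ? m.group() : String.valueOf(value);
            m.appendReplacement(out, Matcher.quoteReplacement(text));
        }
        m.appendTail(out);
        return out.toString();
    }

    // Hypothetical lookup of a client's template (remote file, database row...).
    private final Function<String, String> templateSource;

    PluggedInViewController(Function<String, String> templateSource) {
        this.templateSource = templateSource;
    }

    // Builds the model, renders the client's template, and returns the request
    // attributes that the single JSP page would simply print.
    Map<String, Object> handle(String clientId) {
        Map<String, Object> model = new HashMap<>();
        model.put("client", clientId);
        model.put("total", 1234); // hypothetical report data

        String template = templateSource.apply(clientId);
        if (template == null) {
            template = "<p>No view configured for $client</p>";
        }
        Map<String, Object> requestAttributes = new HashMap<>();
        requestAttributes.put("renderedView", render(template, model));
        return requestAttributes;
    }

    public static void main(String[] args) {
        Map<String, String> stored = new HashMap<>();
        stored.put("acme", "<h1>$client</h1><p>Total: $total</p>");
        PluggedInViewController controller = new PluggedInViewController(stored::get);
        System.out.println(controller.handle("acme").get("renderedView"));
    }
}
```

Note that the JSP page never changes here: every client-specific decision lives in the template, which is just another piece of data the controller looks up.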

It's a tough concept to ponder. "My view is data, you say? I thought the model was the data?" Well, it is. But the view just happens to be something that we retrieve from an external data source, whether as a file on a remote server or as a template stored in a database.

Forms can be data. I don't mean the data that is entered in the forms, I mean the forms themselves! In your reporting tool, clients want to be able to use different forms with different field names and values. If your developers are smart, they can design a database schema that is abstract and extensible, one where field names aren't column names, but pieces of data themselves.

Fitness Tracker

This is also the answer to my Senior Design project! I designed a system for allowing health club owners to add exercises to the system so that their members could record data for each exercise.

Since each exercise is different, each type of exercise has different data fields. These things can't be hard-coded because they are data: not data that the member would see, but data that the client (the club owner or manager) would see! Once again, the form fields are data!
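
The "form fields are data" idea can be sketched in Java as well. The sketch assumes a hypothetical field-definition table with one row per (exercise, field name, input type, display order); the form for any exercise is rendered from those rows, so adding an exercise means inserting rows rather than writing a new page or altering a table.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Sketch of "the form fields are data": each exercise's fields are rows in a
// hypothetical field-definition table instead of hard-coded columns or pages.
class FormsAsData {

    // One row of the hypothetical table:
    // (exercise, field_name, input_type, display_order).
    static final class FieldDefinition {
        final String exercise;
        final String name;
        final String inputType;
        final int displayOrder;

        FieldDefinition(String exercise, String name, String inputType, int displayOrder) {
            this.exercise = exercise;
            this.name = name;
            this.inputType = inputType;
            this.displayOrder = displayOrder;
        }
    }

    // Builds the form for one exercise purely from its definition rows, so
    // adding an exercise or a field means inserting rows, not editing code.
    static String renderForm(String exercise, List<FieldDefinition> definitions) {
        StringBuilder html = new StringBuilder("<form>");
        definitions.stream()
                .filter(d -> d.exercise.equals(exercise))
                .sorted(Comparator.comparingInt((FieldDefinition d) -> d.displayOrder))
                .forEach(d -> html.append("<label>").append(escape(d.name))
                        .append(" <input type=\"").append(escape(d.inputType))
                        .append("\" name=\"").append(escape(d.name))
                        .append("\"></label>"));
        return html.append("</form>").toString();
    }

    // Minimal HTML escaping, since field names now come from data.
    private static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;")
                .replace(">", "&gt;").replace("\"", "&quot;");
    }

    public static void main(String[] args) {
        List<FieldDefinition> rows = new ArrayList<>();
        rows.add(new FieldDefinition("bench press", "weight", "number", 1));
        rows.add(new FieldDefinition("bench press", "reps", "number", 2));
        rows.add(new FieldDefinition("treadmill", "minutes", "number", 1));
        rows.add(new FieldDefinition("treadmill", "incline", "number", 2));
        System.out.println(renderForm("treadmill", rows));
    }
}
```

The values a member enters could be stored the same way, as (member, exercise, field name, value) rows, which is what keeps field names out of the column list.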

Same with the reporting tool! Each client will have their own idea of what data they want their employees or customers to be able to enter. Therefore, you absolutely must solve the problem once and only once! Otherwise, you'll be scrambling to reinvent the wheel for each new customer that knocks on the door.

I'm going to continue writing about this subject, as I feel that the concept of a model-generated view, a "view view," or whatever I decide to call it, is often overlooked.

As Computer Scientists, we often look for ways to solve a problem not once, but for N cases. Some really smart people at the Apache Foundation and SpringSource have designed and implemented solutions for Java that lay the foundation or "framework" for quickly and efficiently starting the development of an MVC application.

MVC Framework Flow in Struts and Spring

With both Struts and Spring, a request is sent from a browser to a servlet container, such as Tomcat or Jetty. The container hands off the request to a servlet declared in an XML configuration file. The servlet processes the request and hands it off to a controller. The controller, typically an Action subclass in Struts or a Controller subclass in Spring, makes calls to the model to retrieve data from the data source, manipulate that data, and pass it back to the controller. Afterwards, the result is forwarded to the view. In Struts, an ActionForward is returned by calling the mapping.findForward method and passing in a String that maps to a JSP page declared in the configuration file. In Spring, a ModelAndView object is instantiated with the JSP filename as an argument.

Advantages and Disadvantages of Struts and Spring

This particular pattern is well-known for decoupling the business logic from the user interface, the user interface from the controller, and the controller from the business logic. The advantage is that the view can be modified, maintained, or completely replaced, independent of the rest of the system.

Well, almost....

If you want to change the view, it's still a development issue. You still have to deploy a new Web Application Archive, or WAR file for short. You still have to test the application, as other developmental changes could affect the behavior of the system.

Unless you break the application up into completely separate modules that all exist outside the application....

Velocity, a templating language developed by the Apache Foundation, is very similar to Java Server Pages (JSP), except the Velocity files can be hosted outside the WAR file, on a completely different server, completely independent of the application.

The Velocity Advantage

Picture an enterprise-level reporting tool designed to be hosted by an application service provider. Imagine that there are thousands of clients who use this system and who regularly depend on the functionality. If you're a project manager for this reporting tool and you want to allow all of your clients to customize and skin the user interface without needing to involve your developers, then you need the Velocity Advantage!

The JSP Disadvantages

Even with MVC, you may have a few JSP pages that create a tightly coupled system where a change to the HTML structure for one client will affect thousands, perhaps with disastrous results. While the number of pages is small, it may take a lot of work to make them all work together for each logical case.

On the other hand, you could have N JSP pages for N clients. This means that deploying a new feature means that you will need to modify N JSP pages.

No matter what solution you use, the fact remains that updating the user interface becomes a development issue that involves a complete development, testing, and deployment cycle, as well as the possibility of either introducing new bugs into the system or creating a situation where you require intense, time-consuming CVS management. Being organized takes time.

But using Velocity, these JSP page equivalents could be stored on the client's servers, or an external server that you maintain that is specifically dedicated to hosting these view components. Suppose you then have a configuration file where you can store the location of the view for each client, kind of like struts-config but better; it exists outside the WAR file! And suppose the client has control over which view they use!

Sure, with Struts and Spring, the configuration exists in an XML file outside of the codebase, but you still have to pack a new WAR file and possibly restart your servlet container when making changes to these files. This, of course, equates to downtime!

Here is where those smart people at the Apache Foundation failed to completely solve this problem. (For the record, the developers of Struts are extremely bright problem solvers who have made significant contributions to the development community. These contributions have reduced the cost of development significantly in many J2EE environments, and without them, I would probably still be sorting through a mess of code trying to figure out how to write a controller! Additionally, I've not seen a container/framework yet that solves this problem in the manner that I propose.) What's the point of externalizing all of my configuration if changing it still requires me to disrupt my production systems? Any changes made to web.xml, server.xml, struts-config.xml, tiles-defs.xml, or any other configuration file requires a servlet container restart, in most cases.

And this is exactly the type of problem that would make the above scenario fail with a framework such as Struts, or Spring, or WebWork, or Struts 2.

Model View Squared Controller

The framework can of course still be used, just not with the struts-config.XML file. To allow clients to modify their HTML or CSS or change the view that they see, they have to be able to access your own customized, instantly reloadable configuration schema, not the framework schema. You can use an XML file on your server or even a database, as long as changes to the data are instantly recognizable.

This is the type of framework I want to develop. For now I'll call it Model View Squared Controller, although the name is too long and I need a better one. The concept is that your view would consist of a single JSP page whose only job is to output data from a bean or even the HttpServletRequest object. The controller would populate the bean using a view template retrieved from outside the application: the template would be loaded as a Velocity template, processed in the controller, and the result forwarded to the JSP page.

Essentially, there are two "views"; one is part of the application and the other plugs into this view. The JSP page within the WAR file does nothing except render the data, while the other view -- the plugged-in view -- is actually retrieved from a remote data source and assembled in the controller. It's almost as if your view becomes a piece of data. It actually is data!
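Here is a minimal Java sketch of the plugged-in view, under a few assumptions: each client's template comes from a hypothetical `templateSource` function (standing in for a remote file or database lookup), and a tiny `${name}` substitution stands in for Velocity so the example runs without dependencies. In a real servlet, the returned string would be stored as a request attribute and the JSP inside the WAR would simply print it.

```java
import java.util.Map;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Sketch of the "plugged-in view": the template is fetched at request time
 * from outside the application and merged with the model in the controller.
 * NOTE: the ${name} substitution below is a stand-in for Velocity; a real
 * controller would hand the template text and model to a template engine.
 */
class PluggedInViewController {
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{(\\w+)}");

    /** Hypothetical lookup: client ID -> template text stored outside the WAR. */
    private final Function<String, String> templateSource;

    PluggedInViewController(Function<String, String> templateSource) {
        this.templateSource = templateSource;
    }

    /** Returns the markup that would be placed in a request attribute for the JSP. */
    String handle(String clientId, Map<String, Object> model) {
        String template = templateSource.apply(clientId);   // the view is data
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            // Missing model entries render as empty strings in this sketch.
            Object value = model.getOrDefault(m.group(1), "");
            m.appendReplacement(out, Matcher.quoteReplacement(String.valueOf(value)));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Since the template is looked up on every request, replacing a client's template in the external store changes that client's view on the next request, with no repackaging.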

It's a tough concept to ponder. "My view is data, you say? I thought the model was the data?" Well, it is. But the view just happens to be something we retrieve from an external data source, whether that's a file on a remote server or a template stored in a database.

Forms can be data. I don't mean the data that is entered in the forms, I mean the forms themselves! In your reporting tool, clients want to be able to use different forms with different field names and values. If your developers are smart, they can design a database schema that is abstract and extensible, one where field names aren't column names, but pieces of data themselves.
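A rough Java sketch of that idea, assuming a hypothetical `FormDefinition` class: each field is a row of data (name, label, input type) rather than a hard-coded column or HTML element, so a new client's form is just new data. In a database this might correspond to a field-definition table plus a field-value table keyed by entry and field name; all names here are illustrative.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Sketch of "the form is data": field names live in rows, not column names,
 * so each client can define its own form without a schema change.
 */
class FormDefinition {
    record Field(String name, String label, String type) {}

    private final List<Field> fields = new ArrayList<>();

    FormDefinition addField(String name, String label, String type) {
        fields.add(new Field(name, label, type));
        return this;
    }

    /** Renders inputs from the definition (labels are not HTML-escaped here). */
    String renderHtml() {
        StringBuilder html = new StringBuilder();
        for (Field f : fields) {
            html.append("<label>").append(f.label()).append(" <input name=\"")
                .append(f.name()).append("\" type=\"").append(f.type())
                .append("\"></label>\n");
        }
        return html.toString();
    }

    /** Keeps only submitted values for fields this form defines, in field order. */
    Map<String, String> capture(Map<String, String> submitted) {
        Map<String, String> values = new LinkedHashMap<>();
        for (Field f : fields) {
            if (submitted.containsKey(f.name())) {
                values.put(f.name(), submitted.get(f.name()));
            }
        }
        return values;
    }
}
```

Adding a field for one client is a call to `addField` (or an inserted row), not a new column and a redeployment.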

Fitness Tracker

This is also the answer to my Senior Design project! I designed a system for allowing health club owners to add exercises to the system so that their members could record data for each exercise.

Since each exercise is different, each type of exercise has its own data fields. These fields can't be hard-coded because they are data: not data that the member would see, but data that the client (the club owner or manager) would see! Once again, the form fields are data!

Same with the reporting tool! Each client will have their own idea of what data they want their employees or customers to be able to enter. Therefore, you absolutely must solve the problem once and only once! Otherwise, you'll be scrambling to reinvent the wheel for each new customer that knocks on the door.

I'm going to continue writing about this subject, as I feel that the concept of a model-generated view, view view, or whatever I decide to call it, is often overlooked.

Labels:

Frameworks,

Java,

MVC,

Problem Solving,

Struts

Wednesday, March 19, 2008

Technical Customer Service

Quoted from Joel On Software - Some interesting jobs:

"My pet theory is that if the person who takes the call when a customer is missing, say, the Pear Mail module, if this person is the same person who maintains the setup code, then they will eventually get sick of sshing into customers' servers and typing "pear install Mail" for them and they'll just fix it in the setup code once and for all. And I think a lot of people would find a job that combines problem solving with new software development is going to be pretty interesting,..." - Joel Spolsky

I agree 100% with Joel's theory on having the software developers also be the customer service department. I don't deal directly with external clients, but I do have to fix problems when things break. As a result, I like to fix them the first time so that I don't have to deal with them again.

It's a beautiful feeling when you can solve a problem the first time for N cases where N -> INFINITY!

As long as the business model empowers software developers to actually implement these solutions, this organizational style will be successful. I feel that I have this level of freedom at my company, and I'm fairly certain that this level of freedom exists at Fog Creek.

Thursday, March 13, 2008

Multiple HTML Reply Signatures for Google Apps

Gmail HTML Reply Signatures Greasemonkey Script

The company I work for uses Gmail for email communications. Specifically, the service the company uses is part of the Google Apps bundle of services, and it's the same service that I use for my blog email.

As many of you may know, Gmail's custom signatures can't contain HTML by default. However, HTML Multiple Reply Signatures for Gmail solves this by using the Greasemonkey engine to inject HTML into the page. The HTML Multiple Reply Signatures script (and Firefox extension) adds a drop-down list to the left of the Gmail editor where users can choose from up to four customized HTML signatures; the selected signature is then inserted into the editor.

History of Gmail HTML Multiple Reply Signatures

About a year ago, I integrated the HTML Reply Signatures script into our company's global Windows profile. Since the global profile was shared across most of the company workstations, I created a DOS batch script that took the user's Windows login details from a workstation PC and generated the Greasemonkey script using this information. The generated script on each workstation is exactly the same, except for the filename of the signature image to use. The constraint is that all of the images must use a standard naming convention and all be located on the same public server.
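The generation step can be sketched in Java (the original was a DOS batch script, which isn't reproduced here). The image base URL, the `__SIGNATURE_IMAGE__` placeholder, and the lower-case `.png` naming convention are hypothetical stand-ins for whatever convention the shared public server actually uses.

```java
import java.util.Locale;

/**
 * Sketch of the per-workstation generation step: every generated script is
 * identical except for the signature image, whose filename is derived from
 * the Windows login name. URL, placeholder, and naming rule are hypothetical.
 */
class SignatureScriptGenerator {
    static final String IMAGE_BASE_URL = "http://images.example.com/signatures/";
    static final String PLACEHOLDER = "__SIGNATURE_IMAGE__";

    /** Applies the naming convention, e.g. "JSmith" -> ".../jsmith.png". */
    static String imageUrlFor(String loginName) {
        return IMAGE_BASE_URL + loginName.trim().toLowerCase(Locale.ROOT) + ".png";
    }

    /** Fills the shared script template with this user's image URL. */
    static String generate(String template, String loginName) {
        return template.replace(PLACEHOLDER, imageUrlFor(loginName));
    }
}
```

On a Windows workstation, the login name could come from `System.getenv("USERNAME")`, the same environment variable a batch script reads as `%USERNAME%`.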

Reliable Gmail HTML Signatures Solution

This solution has worked out quite well. It has been very stable and reliable in the last year and has required absolutely zero maintenance. Now that we have a need for certain people to have more than one signature card, I suggested that one of our managers install the HTML Multiple Reply Signatures Greasemonkey script. So far, he's pretty satisfied with it.

Not being on the global profile made this much easier; otherwise, I would have needed to write a new batch script that generates the HTML Multiple Reply Signatures script instead of the HTML Reply Signatures script, which can only inject one signature. In addition, because he isn't on the global profile, he can name the image files whatever he wants, as long as he updates the signature HTML in the script to point to the correct filenames.

I recommended the script instead of the Firefox Extension for three reasons:

- The script is actually more reliable and bug-free than the Firefox Extension.

- This particular manager is technically adept and fully capable of modifying the script himself to configure new signatures.

- Google Apps Gmail is not using Gmail's new interface, so it hasn't been susceptible to the same bugs that standard Gmail users have faced.

New version of Gmail

Once Gmail moves these customers to the new version, we're likely to see problems. I wonder why they haven't done this yet. The bigger question is, with my organization's growing use of this particular tool, should we prepare for the change by using a plug-in that supports the new interface?

At any rate, it was cool to see the script being used in my own organization! It may be a good idea to seriously consider moving the script to Gmail's Greasemonkey API to support the new interface.

Labels:

Business,

Firefox development,

Gmail,

Greasemonkey,

HTML Signatures

Wednesday, March 5, 2008

500 Gigabytes of Relief

Backup Humor

I just bought a Seagate OneTouch 4 Maxtor 500GB external hard drive. The hard drive is marketed for backup purposes and comes with the backup software installed on the hard drive. Below is a note included in the instructions for step 2:

Note: It is highly recommended that you copy the current contents of the OneTouch 4 to your computer before proceeding. Reference Seagate Knowledge Base article 4169 for more information.

It doesn't inspire confidence to know that I have to back up the software on the backup drive in case something goes horribly wrong. If the unthinkable does happen, the backup software will be the least of my worries. Still, I'm not too concerned: the really important things will be backed up on a CD or DVD.

Solution to my Software Backup Problems

Having this hard drive will solve a big problem of mine: lack of space. With two Linux installations, Windows XP, and several Virtual PC images, my 160GB internal hard drive has reached capacity.

This has led to many other problems that all stem from lack of space. I want to try more distributions of Linux, but I have so much data spread out on different partitions that I was afraid I might lose something important if I tried to install the latest version of Ubuntu or SUSE. Now I am free to proceed with an upgrade.

I also wanted to convert my Virtual PC images to VMware as part of the Microsoft Quit Date. This hasn't gone as well as I'd planned, but it hasn't gone badly either.

I am using Lightning, the Mozilla Thunderbird extension, as my calendar application; however, I'm still using MS Outlook for email. If I can get a solid Linux distribution running, that will help reduce my dependence on Microsoft. At the moment, Pandora is my only source of music in Linux. I had MP3 support briefly, but for some reason GStreamer is complaining that something is missing.

Don't get me wrong: I like troubleshooting broken software, just not my music player. I just want that to work. I don't care why it broke or why Novell didn't include it by default. After fixing it once and having it break again, I'm at the point where I just want to hear music without reading a bunch of knowledge base articles.

However, media player issues aside, there are a ton of advantages to using Linux. As a programmer, I find it 10 times easier to get things done. Web programming isn't the same when you load a local file in the browser: you're not using HTTP, you're using the file:// protocol, and AJAX, along with other techniques, behaves completely differently under that scheme. To get an accurate idea of what a JavaScript library or technique will do when served from a web server, you need a development platform that mirrors that environment. In Linux, I can configure Apache, PHP, and even Java's Tomcat servlet container in under 30 minutes. In Windows, I'm not as confident.
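For Java developers, the JDK itself ships a small HTTP server (`com.sun.net.httpserver`) that is enough to load pages over `http://localhost` instead of `file://`. The sketch below serves static files from a directory; the class name and the simple 404 handling are assumptions, and it is no substitute for a properly configured Apache or Tomcat. (Java 18 and later also include a `jwebserver` command that does much the same from the shell.)

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.file.Files;
import java.nio.file.Path;

/**
 * Minimal static file server for local development, so AJAX requests go
 * over http:// the way they will in production. Port 0 picks a free port.
 */
class LocalDevServer {
    static HttpServer start(Path root, int port) throws IOException {
        Path base = root.toAbsolutePath().normalize();
        HttpServer server = HttpServer.create(new InetSocketAddress("localhost", port), 0);
        server.createContext("/", exchange -> {
            String uriPath = exchange.getRequestURI().getPath();
            Path file = base.resolve(uriPath.substring(1)).normalize();
            // Refuse paths that escape the root or don't name a regular file.
            if (!file.startsWith(base) || !Files.isRegularFile(file)) {
                exchange.sendResponseHeaders(404, -1);
                exchange.close();
                return;
            }
            byte[] body = Files.readAllBytes(file);
            String type = Files.probeContentType(file);
            exchange.getResponseHeaders().set("Content-Type",
                    type != null ? type : "application/octet-stream");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }
}
```

Calling `LocalDevServer.start(Path.of("site"), 8080)` and then opening `http://localhost:8080/index.html` exercises same-origin AJAX calls the way a real web server would.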

I can also use tools like grep, vi, and locate in Linux; in Windows, I am lost without them. The cute puppy that appears in Windows Search is cool and all, but I don't have all day to search the file system for a string in a file. I just want results, and grep gives almost instant results. Sure, there's no puppy, as my 7-year-old nephew would say, but it's fast.
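To illustrate what `grep -rn` does, here is a rough Java equivalent that walks a directory tree and reports `path:line:text` for each line containing a fixed string. It's a hypothetical `MiniGrep` sketch, not a replacement for grep: it reads each file fully, only handles UTF-8 text, and does no regular-expression matching.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.MalformedInputException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

/** Rough equivalent of `grep -rn NEEDLE DIR` for a fixed string. */
class MiniGrep {
    static List<String> search(Path root, String needle) {
        List<String> hits = new ArrayList<>();
        try (Stream<Path> paths = Files.walk(root)) {
            paths.filter(Files::isRegularFile).sorted().forEach(file -> {
                try {
                    List<String> lines = Files.readAllLines(file);   // UTF-8
                    for (int i = 0; i < lines.size(); i++) {
                        if (lines.get(i).contains(needle)) {
                            hits.add(file + ":" + (i + 1) + ":" + lines.get(i));
                        }
                    }
                } catch (MalformedInputException e) {
                    // Not valid UTF-8 (e.g. a binary file); skipped in this sketch.
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return hits;
    }
}
```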

Little by little, I have been moving data to the hard drive. In the next couple of weeks, I hope to have SUSE 10.3 installed on a partition. I might also install Ubuntu. We'll see what happens.

Oh, and in case something happens to the hard drive, don't worry! I've backed up the backup software on my computer. Now you can sleep at night.

UPDATE (7/5/2008): I installed Ubuntu 8.04 last month and love it! I also share a VMware image with Windows using VMware Player on Ubuntu, which is a great alternative to, and enhancement of, a dual-boot setup.

Labels:

Cross-platform,

Data Loss Prevention,

Linux,

Operating systems,

Windows

Saturday, January 12, 2008

Data Loss Prevention Tips

Oops!

That's the sound of the second most common type of data loss! We've all done it at one point or another. No matter what industry you are in, whether it be software development, information technology, automotive repair, or homemaking, you've most likely experienced some form of data loss caused by some form of human error.